In the previous post we outlined the importance of the Data and Product loop and of a foundational Data Platform. However, it’s easy to get lost today in the myriad of technical jargon used to describe a Data Platform. From creating new categories to rebranding old ones as “modern”, there is no end to it.

While this is great for the overall ecosystem, as new data startups carve out a niche for themselves and then get acquired by larger players, for the users of the platform it only means more internal silos and wasted time and resources.

⚠️ Non-goal: this article does not explain the technical jargon used today, so you won’t find terms like data mesh, data contract, headless BI or modern data stack in here, nor any specific vendor tools.

✅ Goal: this article focuses on the fundamentals of building a Data Platform, the common pitfalls and the tangible deliverables, to inspire deeper thinking that is agnostic to tooling.

With that said, let’s get started.

Fundamentals of a Data Platform

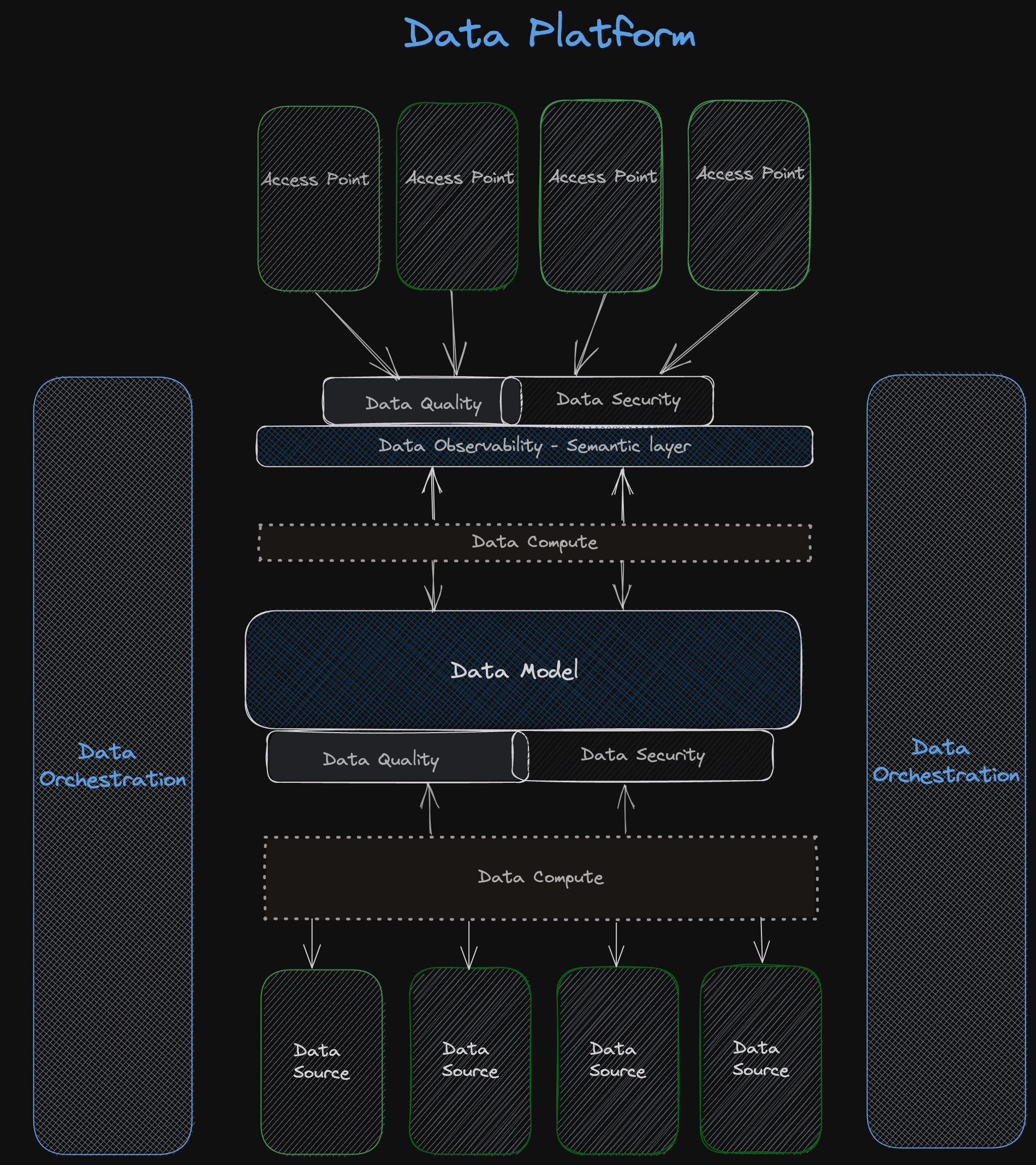

When you zoom out enough, a successful Data Platform encompasses six areas:

🧠 Data Modeling

💠 Data Quality

🚚 Data Compute

🎶 Data Orchestration

👀 Data Observability

🔐 Data Security

🧠 Data Modeling 🧠

Data Modeling is the art of modeling the core user journeys within a product into a concise set of data artifacts, aka the data model. The data model is the brain of the data platform: it guarantees consistency in access, provides simple answers to fundamental questions, remembers the past and is prepared for the future.

It is a lost art in today’s “modern” data stack, which puts the spotlight on technology and incentivizes companies to invest in tooling first and then work backwards to the data model. Crafting the right data models early on clarifies the kind of tools and technology a data organization actually requires.

It is important to remember that data modeling doesn’t start in the data warehouse; it must start with defining event schemas that map to your user journeys.
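To make this concrete, here is a minimal Python sketch, assuming a hypothetical checkout journey with two steps. The event classes (`ProductViewed`, `CheckoutCompleted`), their fields and the `parse_event` helper are illustrative only; the point is that each step of the journey is pinned to one explicit schema, and payloads that drift from it are rejected at the source rather than discovered later in the warehouse.

```python
from dataclasses import dataclass, fields
from datetime import datetime


# Hypothetical schemas for a simple checkout journey: view a product, then check out.
@dataclass(frozen=True)
class ProductViewed:
    user_id: str
    product_id: str
    ts: datetime


@dataclass(frozen=True)
class CheckoutCompleted:
    user_id: str
    order_id: str
    amount_cents: int
    currency: str
    ts: datetime


# Each step of the user journey maps to exactly one event schema.
CHECKOUT_JOURNEY = {
    "view_product": ProductViewed,
    "complete_checkout": CheckoutCompleted,
}


def parse_event(step: str, payload: dict):
    """Build a typed event, rejecting payloads whose field names drift from the schema.

    Only field names are checked here; value types are not validated.
    """
    schema = CHECKOUT_JOURNEY[step]
    expected = {f.name for f in fields(schema)}
    received = set(payload)
    missing, extra = expected - received, received - expected
    if missing or extra:
        raise ValueError(f"{step}: missing={sorted(missing)} extra={sorted(extra)}")
    return schema(**payload)
```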

Deliverable: A core data warehouse containing canonical measures and dimensions, along with their data lineage.

💠 Data Quality 💠

Data is always going to be messy and anomalous no matter how hard you try to avoid it. Bot traffic, event logging misfires, delayed data and code bugs are inevitable, and you just have to live with them.

The role of Data Quality in the platform is to identify these anomalies before they reach your consumers (internal or external) and to raise actionable alerts: notify downstream consumers and annotate dashboards and the status page.

Two common pitfalls here are non-actionable alerts, which turn alerting into a dumping ground, and non-contextual alerts, which make it impossible to triage in a timely fashion. It is imperative to treat Data Quality issues as SEV0 incidents.
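One way to make an alert both actionable and contextual is to attach the downstream artifacts it impacts, derived from lineage, so the right consumers are notified. The sketch below assumes lineage is available as a simple adjacency map; the artifact names, `downstream_of` and `build_alert` are hypothetical.

```python
from collections import deque

# Hypothetical lineage: artifact -> artifacts or consumers that read from it.
LINEAGE = {
    "raw_events": ["sessions"],
    "sessions": ["daily_active_users", "retention_cohorts"],
    "daily_active_users": ["exec_dashboard"],
    "retention_cohorts": ["growth_dashboard"],
}


def downstream_of(artifact: str, lineage: dict) -> list:
    """Breadth-first walk of the lineage graph to find everything affected by `artifact`."""
    seen, queue, impacted = {artifact}, deque([artifact]), []
    while queue:
        node = queue.popleft()
        for child in lineage.get(node, []):
            if child not in seen:
                seen.add(child)
                impacted.append(child)
                queue.append(child)
    return impacted


def build_alert(artifact: str, check: str, detail: str, lineage: dict) -> dict:
    # Context for triage: what failed, why, and who downstream needs to know.
    return {
        "artifact": artifact,
        "check": check,
        "detail": detail,
        "impacted": downstream_of(artifact, lineage),
    }
```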

Deliverable: First, invest in understanding your data: what quality means to your consumers, what can go wrong in the data flow and where the blind spots are. From there, invest in a framework that lets you define quality checks (inferential or predefined) and alerts (fatal or non-fatal) for your data artifacts. Data artifacts must have clear lineage and ownership to support root cause analysis.
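A minimal sketch of such a framework, assuming rows arrive as Python dicts and a short history of daily row counts is available. It shows one predefined check (`not_null`) and one inferential check (`volume_within`, a z-score against history), each marked fatal or non-fatal, with the owner attached to the result. All names are hypothetical; a real framework would run these checks against the warehouse rather than in memory.

```python
from dataclasses import dataclass
from statistics import mean, stdev
from typing import Callable


@dataclass(frozen=True)
class Check:
    name: str
    fatal: bool  # fatal failures block publishing; non-fatal ones only warn
    fn: Callable[[list, list], bool]  # (rows, history) -> True if the check passes


def not_null(column):
    # Predefined check: every row must have a non-null value in `column`.
    return lambda rows, history: all(r.get(column) is not None for r in rows)


def volume_within(z_max):
    # Inferential check: today's row count must lie within `z_max` standard
    # deviations of the historical daily row counts (needs >= 2 history points).
    def fn(rows, history):
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            return len(rows) == mu
        return abs(len(rows) - mu) / sigma <= z_max
    return fn


def run_checks(artifact, owner, rows, history, checks):
    failed = [c for c in checks if not c.fn(rows, history)]
    return {
        "artifact": artifact,
        "owner": owner,  # who to contact for root cause analysis
        "publish": not any(c.fatal for c in failed),
        "fatal": [c.name for c in failed if c.fatal],
        "warnings": [c.name for c in failed if not c.fatal],
    }
```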

🚚 Data Compute 🚚

Data Compute is all about getting data flowing in and out of the data platform. This is where the data model is translated into code and application logic, which makes it the part most vulnerable to bugs and tech debt. Examples include computing Daily Active Users and retention cohorts, and feeding data back into the Product.
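As an example of the data model turning into application logic, Daily Active Users can be computed from raw `(user_id, timestamp)` events as below. This is a minimal sketch: it assumes events are already deduplicated, bot-filtered and expressed in a single timezone, and `daily_active_users` is a hypothetical helper.

```python
from collections import defaultdict
from datetime import date, datetime


def daily_active_users(events: list) -> dict:
    """Count distinct users per calendar day from (user_id, datetime) pairs."""
    users_by_day = defaultdict(set)
    for user_id, ts in events:
        users_by_day[ts.date()].add(user_id)
    # Sort by day so the output order is deterministic.
    return {day: len(users) for day, users in sorted(users_by_day.items())}
```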

Data compute spans everything from ingestion to delivery. Its footprint in the data platform grows as the Product matures, and it is typically powered by one or more programming languages. There are two kinds of data computation flows: streaming and batch. A streaming flow ingests a constant stream of events from your application (logins, impressions, clickstream, checkouts) and emits a stream of processed data. A batch flow ingests data at a predefined cadence (hourly dumps of transaction database logs, daily file uploads, third-party data, etc.) and processes it in batches.

Two common pitfalls here are building without immutability and idempotency guarantees (i.e. rerunning the compute should produce the same results), and over-engineering the solution with paradigms that hurt readability. The latter shows up as layers of brittle abstraction or as standalone solutions. Pairing data ingestion flows with your data orchestration platform yields better consistency within the data platform.
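The idempotency guarantee can be illustrated with a toy in-memory table partitioned by day. In this sketch (all names hypothetical), the job derives a partition purely from unmodified raw inputs and overwrites it, so a rerun yields the same table; the append-based writer, shown for contrast, duplicates rows on every rerun.

```python
def overwrite_partition(table: dict, partition: str, rows: list) -> None:
    # Idempotent: the whole partition is replaced, so reruns converge to one result.
    table[partition] = list(rows)


def append_partition(table: dict, partition: str, rows: list) -> None:
    # Not idempotent: every rerun appends the same rows again.
    table.setdefault(partition, []).extend(rows)


def run_daily_job(table: dict, raw_events: list, day: str, write=overwrite_partition) -> None:
    # Derive the output only from the raw inputs for `day`; raw_events is never modified.
    rows = sorted({e["user_id"] for e in raw_events if e["day"] == day})
    write(table, day, rows)
```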

Deliverable: Work backwards from your desired data model and pick a programming model that unifies both streaming and batch compute modes. Pairing data compute with data orchestration yields better consistency within the data platform.
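A minimal sketch of what unifying the two modes can mean in practice: the business logic lives in one pure function, and thin batch and streaming runners apply it to a bounded list or an unbounded iterator respectively. The `enrich` rule (drop bot traffic, normalize the platform name) and the runner names are hypothetical.

```python
from typing import Iterable, Iterator, Optional


def enrich(event: dict) -> Optional[dict]:
    """Business logic written once: drop bot traffic and normalize the platform."""
    if event.get("is_bot"):
        return None
    return {**event, "platform": str(event.get("platform", "unknown")).lower()}


def run_batch(events: list) -> list:
    # Batch mode: process a bounded collection in one go.
    return [out for e in events if (out := enrich(e)) is not None]


def run_stream(events: Iterable[dict]) -> Iterator[dict]:
    # Streaming mode: process events one at a time as they arrive.
    for e in events:
        out = enrich(e)
        if out is not None:
            yield out
```

Because both runners call the same `enrich`, a fix to the business logic lands in both modes at once.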

🎶 Data Orchestration 🎶

Data Orchestration is the conductor responsible for controlling how data moves across the platform. As the Product matures, there will be several inflows (marketing data, email data, transactional data, event streams) and outflows (experiment platform, BI, transactional systems, executive dashboards) of data.

To model data moving across the platform, think of each inflow and outflow as a data node; the nodes are connected to form a directed acyclic graph (DAG), referred to as a data pipeline. The fundamental responsibility of a data orchestration platform is to author, schedule and execute data pipelines with idempotency guarantees. Clean abstractions for implementing and referencing a data node along with its dependency chain streamline how data pipelines are built into a managed set of resources.
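Python's standard library `graphlib` (3.9+) is enough to illustrate the core idea: declare each data node with its dependencies and derive an execution order in which every node runs after its upstream nodes. The pipeline below is hypothetical, and a real orchestrator adds scheduling, retries and run state on top of this.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each data node maps to the set of nodes it depends on.
PIPELINE = {
    "ingest_events": set(),
    "ingest_transactions": set(),
    "sessions": {"ingest_events"},
    "revenue": {"ingest_transactions"},
    "exec_dashboard": {"sessions", "revenue"},
}


def execution_order(pipeline: dict) -> list:
    """Return an order in which every node runs after all of its dependencies.

    Raises graphlib.CycleError if the graph is not a DAG.
    """
    return list(TopologicalSorter(pipeline).static_order())
```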

One common pitfall here is not focusing enough on the developer experience, specifically a testing environment that offers a clean namespace for testing code and shipping with confidence.
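A clean testing namespace can be as simple as resolving every logical data node to a different physical location per environment. This sketch assumes a hypothetical production schema named `analytics` and per-developer `dev_<user>` schemas; `resolve_table` is illustrative, not part of any particular tool.

```python
from typing import Optional

PROD_SCHEMA = "analytics"  # hypothetical production schema name


def resolve_table(node: str, env: str, user: Optional[str] = None) -> str:
    """Map a logical data node to a physical table for the given environment.

    Production writes go to the shared schema; each developer gets an isolated
    schema so test runs never touch production tables.
    """
    if env == "prod":
        return f"{PROD_SCHEMA}.{node}"
    if env == "dev":
        if not user:
            raise ValueError("dev runs need a user to namespace their tables")
        return f"dev_{user}.{node}"
    raise ValueError(f"unknown environment: {env}")
```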

Deliverable: Research off-the-shelf tools and drive early consensus on picking the right one. The right tool should be able to interlink all data nodes. Avoid running multiple data orchestration tools.

👀 Data Observability 👀

Data Observability is the eyes of the data platform. It provides a semantic layer on top of the data model by translating data access patterns into combinations of subjects, metrics and dimensions.

- metric:Daily Active subject:Users in dimension:Asia

- metric:App Installs subject:Devices on dimension:android

- metric:Impressions subject:Page
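A semantic layer of this kind can be sketched as a small set of metric definitions, each naming its subject and how it aggregates, plus a single query function that accepts an optional dimension slice. The metric names, columns and `query` function below are hypothetical and operate on in-memory rows; a real semantic layer would compile the same definitions into warehouse queries.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Metric:
    name: str
    subject: str                      # column identifying the subject, e.g. "user_id"
    aggregate: Callable[[list], int]  # how subject values roll up into one number


# Hypothetical definitions loosely mirroring the examples above.
METRICS = {
    "daily_active": Metric("daily_active", "user_id", lambda values: len(set(values))),
    "impressions": Metric("impressions", "page_id", len),
}


def query(metric_name: str, rows: list, dimension: Optional[tuple] = None) -> int:
    """Evaluate a metric over rows, optionally sliced by a (column, value) dimension."""
    metric = METRICS[metric_name]
    if dimension is not None:
        column, value = dimension
        rows = [r for r in rows if r.get(column) == value]
    return metric.aggregate([r[metric.subject] for r in rows])
```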

By treating metrics as first-class citizens, data can be truly democratized to everyone in the organization, technical and non-technical audiences alike, while ensuring strong data governance practices. The fundamental responsibility of an Observability Platform is to take complex business logic and information-overloaded data artifacts and distill them into an intuitive format. It takes a highly collaborative, cross-functional team (Engineering, Data Science, Product, Research and other stakeholders) to design and build Data Observability the right way.

Invest early in understanding your core user journeys and designing the right metrics and dimensions. This should go hand in hand with designing your data model. Don’t just adopt industry-defined metric definitions and outsource metric creation to a closed tool at the outset. It is important to leave room for customization, because chances are you won’t get your metrics right early on and they will need several iterations. Remember, a tool is always a means to an end: it should fit your use case, not the other way round.

Deliverable: A repository of materialized canonical and certified metrics with consistent APIs and interfaces for definition and access.
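A minimal sketch of such a repository, assuming definitions are stored as versioned SQL text with an owner and a certification flag. `MetricRepository` and its methods are hypothetical; the design choice worth noting is that consumers get the latest certified version by default, so uncertified iterations can coexist without leaking into dashboards.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class MetricDefinition:
    name: str
    owner: str
    sql: str  # hypothetical: the definition stored as SQL text
    version: int
    certified: bool = False


class MetricRepository:
    """One place to define, certify and look up metrics."""

    def __init__(self):
        self._versions = {}  # metric name -> list of MetricDefinition, oldest first

    def define(self, name: str, owner: str, sql: str) -> MetricDefinition:
        versions = self._versions.setdefault(name, [])
        definition = MetricDefinition(name, owner, sql, version=len(versions) + 1)
        versions.append(definition)
        return definition

    def certify(self, name: str, version: int) -> None:
        versions = self._versions[name]
        versions[version - 1] = replace(versions[version - 1], certified=True)

    def get(self, name: str, certified_only: bool = True) -> MetricDefinition:
        """Return the latest version, restricted to certified ones by default."""
        candidates = [d for d in self._versions.get(name, []) if d.certified or not certified_only]
        if not candidates:
            raise KeyError(f"no matching definition for metric {name!r}")
        return candidates[-1]
```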

🔐 Data Security 🔐

Data Security is the backbone of the data platform. It provides the technology to guarantee that consumer data is protected and handled in compliance with user choices. It is no secret that data privacy laws are getting stricter and more complicated, so it is important for companies to be prepared; ironically, this is also one of the most easily overlooked problems.

Some example scenarios:

- anonymizing all kinds of personally identifiable information (PII).

- wiping out deleted user data per retention policies.

- restricting access to financial data in public companies.
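For the first scenario, a common technique is pseudonymization with a keyed hash: PII values are replaced by an HMAC-SHA256 token that stays stable (so joins still work) but cannot feasibly be reversed without the key. Strictly speaking this is pseudonymization rather than full anonymization. The sketch uses Python's standard `hmac` and `hashlib` modules; the `PII_FIELDS` set and `pseudonymize` helper are hypothetical, and key management is out of scope.

```python
import hashlib
import hmac

PII_FIELDS = {"email", "phone"}  # hypothetical list of PII columns


def pseudonymize(record: dict, key: bytes) -> dict:
    """Return a copy of `record` with PII values replaced by HMAC-SHA256 hex digests.

    The same value and key always produce the same token, so joins on the
    pseudonymized column still work; the input record is not modified.
    """
    out = dict(record)
    for field in PII_FIELDS.intersection(record):
        value = str(record[field]).encode("utf-8")
        out[field] = hmac.new(key, value, hashlib.sha256).hexdigest()
    return out
```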

Unlike transactional systems, a data platform stores historical data, which makes it extra challenging to retroactively apply these rules across the entire storage footprint. The complexity of data access rules grows as the company matures, but it is important to invest early. Delaying such investments only leads to fragmented, band-aid solutions that are disconnected from the core data platform and, in turn, slow down developers and erode stakeholders’ trust in the platform.
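Applying these rules retroactively means rewriting history, not just filtering new data. A minimal sketch, assuming history is stored as day-partitioned rows: the hypothetical `purge` function drops partitions older than the retention window and removes deleted users from the rest, returning a new copy rather than mutating storage in place.

```python
from datetime import date, timedelta


def purge(partitions: dict, deleted_users: set, today: date, retention_days: int) -> dict:
    """Apply retention and user-deletion rules across every historical partition."""
    cutoff = today - timedelta(days=retention_days)
    return {
        day: [row for row in rows if row["user_id"] not in deleted_users]
        for day, rows in partitions.items()
        if day >= cutoff  # partitions older than the retention window are dropped
    }
```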

Deliverable: Middleware that intercepts all data access and storage requests and passes them to a rules-based engine, which then routes each request appropriately.
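A minimal sketch of that middleware, assuming each request carries the caller’s roles and the dataset’s classification tags. The rules (`restrict_financial`, `mask_pii`), the tag and role names, and the decisions they return are hypothetical; the design choice shown is an ordered rule list in which the first rule that returns a decision wins, so stricter rules go first.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass(frozen=True)
class Request:
    user: str
    action: str  # e.g. "read" or "write"
    dataset: str
    roles: frozenset = field(default_factory=frozenset)
    tags: frozenset = field(default_factory=frozenset)  # dataset classification tags


def restrict_financial(req: Request) -> Optional[str]:
    # Only the finance role may touch datasets tagged as financial.
    if "financial" in req.tags and "finance" not in req.roles:
        return "deny"
    return None


def mask_pii(req: Request) -> Optional[str]:
    # Reads of PII datasets go to a masked view unless the caller is privileged.
    if req.action == "read" and "pii" in req.tags and "privacy_officer" not in req.roles:
        return "route_to_masked_view"
    return None


RULES: list[Callable[[Request], Optional[str]]] = [restrict_financial, mask_pii]


def intercept(req: Request, rules=RULES) -> str:
    """Middleware entry point: the first rule returning a decision wins."""
    for rule in rules:
        decision = rule(req)
        if decision is not None:
            return decision
    return "allow"
```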